NextCloud 16 review

In May 2019, Linux Journal asked me to write a review and tutorial for NextCloud 16, and so I did. Then they shut down, so I decided to publish it here instead. Please use it!

DNS is a complex system. What you have read so far is just the tip of… the tip of the iceberg. I hope it is enough, however, to help you decide whether any of these “DNS hacks” could be useful for you, and to show you how to implement them:

Just a few notes on the purpose and structure of DNS, with some really basic configuration procedures and tricks for DNS on Linux.

Static websites are great. Hugo is a really great static website generator. Except for a few things (some not even Hugo’s fault!).

Did you ever delete a post on some website by mistake, only to find out that you had no other copy of it anywhere, not even in Google’s cache? I did. Here is how I recovered it.

A few tips and tricks, from a thread in the Fedora Users mailing list, on how “to digitize several hundred music CDs” under any GNU/Linux distribution.

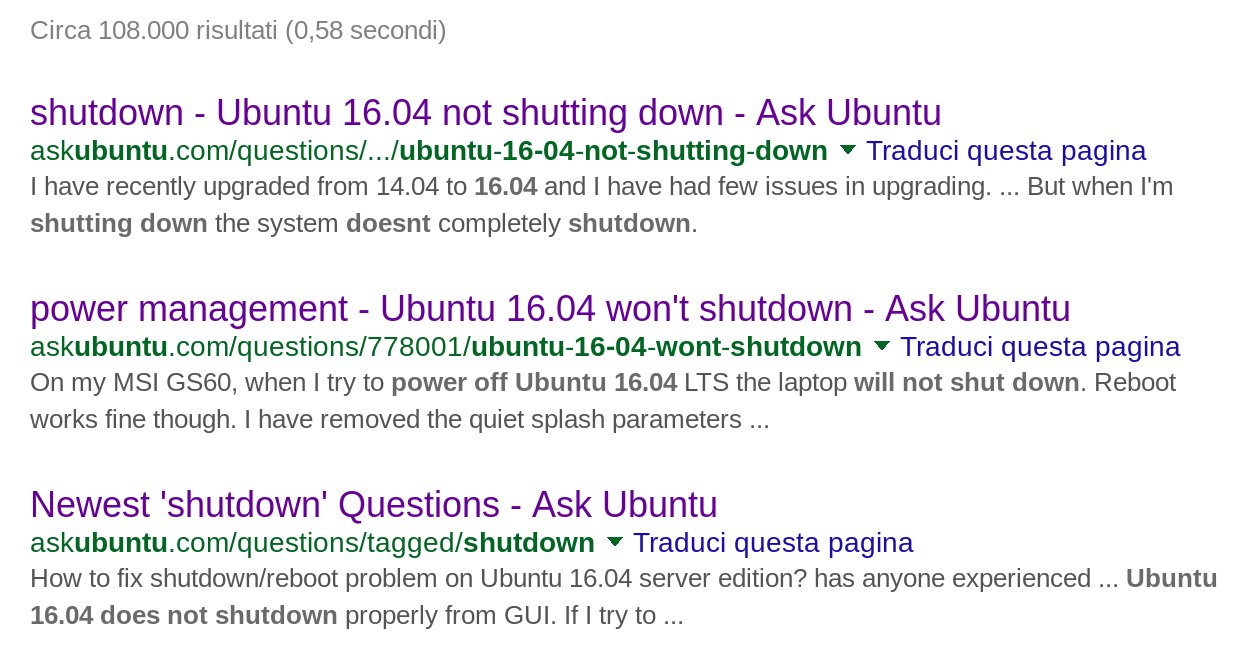

Update 2016/07/01 6pm: SOLVED. Details here (but DO read the whole post anyway, because the cause of this problem on your computer may still be one of those below!)

Owners of websites that remained frozen for a year or more, because the _projects_ they represent were also closed or greatly slowed down their activity, face a big problem when those projects restart: updating the website without losing all its old content is really, really hard, if not impossible. Here is how I handled this problem with an old Drupal website, using an approach that can work on many other database-backed websites.

I just finished reading a copy of “CentOS System Administration Essentials”, written by Andrew Mallett, which I got from the editor for review. Here is what I found.